Introduction

English as a Foreign Language (EFL) writing assessment is essential to language learning and teaching. Accurate and reliable assessment results help learners and educators track progress, identify strengths and weaknesses, and make informed decisions about instructional strategies (Swiecki et al., 2022). Traditional EFL writing assessment relies on human evaluators, which can be time-consuming, expensive, and vulnerable to human bias (Swiecki et al., 2022). However, with the rapid growth of artificial intelligence (AI) technology, it is now possible to create intelligent systems capable of providing reliable and efficient language assessment. AI-based assessment tools can revolutionise EFL assessment, offering new insights into language learning and teaching.

The integration of AI in writing assessment may enhance teaching and learning by providing more personalised and adaptive learning experiences for students. However, there are several research gaps regarding the effective use of AI in writing assessment, particularly in the context of formative assessment. In Algeria, teachers struggle to provide accurate formative assessment of students’ writing performance because it is highly time-consuming and laborious. Although AI-based assessment can provide effective feedback and detailed descriptions of students’ writing, it is not yet widely used by teachers. For this reason, there is a need to explore teachers’ attitudes towards these new tools and their potential to support effective formative writing assessment.

This study addresses the following research questions:

- How do teachers perceive the impact of AI-based assessment methods on formative assessment in EFL writing instruction?

- To what extent are AI-based assessment tools perceived to enhance the effectiveness of formative assessment practices in EFL writing instruction?

1. Review of Literature

Traditional methods of assessing students’ writing performance demand considerable time and effort, reflecting the old paradigm in education (Wolf et al., 2019; Vittorini et al., 2021). These approaches often involve manually grading assignments, exams, or essays, which is slow for large classes, and providing individualised feedback to each student requires further effort. Thus, there is growing interest in AI-based educational assessment due to its potential to address the flaws associated with traditional forms of assessment, including time and effort consumption, lack of effectiveness and accuracy, and bias (Gardner et al., 2021). The new forms engage students in their studies, providing them with a better sense of self-regulation.

Several studies explore the role of AI in supporting formative writing assessment (e.g., Wolf et al., 2019; Hampton, 2021; Swiecki et al., 2022; Holmes et al., 2022). In an article entitled “Artificial Intelligence to Enhance Formative Assessment of Student Writing”, Wolf et al. (2019) discuss the successful implementation of AI to enhance the formative assessment of student writing. The AI system was used to deliver automatic feedback to students, helping them improve the quality of their writing while lowering the time and effort required of instructors. The authors highlight the benefits of using AI in formative assessment, such as providing immediate feedback, tracking students’ progress over time, and reducing grading bias. The study found that the AI system had a positive impact on students’ writing skills and was well received by both students and instructors. Vittorini et al. (2021) also highlight the benefits of AI systems, noting that they have the potential to reduce the time and effort needed to assess students’ writing. They identify three benefits of using AI systems in improving learners’ writing performance:

- Minimising correction times and reducing errors

- Providing automated feedback to support students in solving exercises assigned during the course

- Improving learning outcomes of students who use the system as a formative assessment tool.

AI-based educational assessment is gaining popularity because of its capacity to boost the efficiency and precision of evaluations (Gardner et al., 2021). Holmes et al. (2022) maintain that AI-based writing assessment not only assists teachers in correcting assignments more efficiently but also helps students receive instant feedback, which can lead to improvements in their solutions before submitting their final work for summative assessment. In addition to the above strengths of AI assessment systems, Swiecki et al. (2022) criticise traditional assessment paradigms for the onerous nature of assessment design and implementation, the limited scope of performance snapshots, the uniformity that does not account for the individual skills and backgrounds of participants, and inauthenticity. They maintain that AI-based assessment addresses these issues and provides nuanced views of learning.

Providing nuanced accounts of learning rather than mere snapshots of students’ writing performance is what learners need to develop their skills. Writing is considered the most complex skill in EFL contexts; hence, it needs rich feedback and practice. Teachers cannot assess every piece of writing and give rich and personalised feedback that benefits learners, particularly if they teach large classes, as in the case of higher education. Overall, AI-based writing assessments can be suitable for formative writing assessment, as they can support learners and track their progress continuously without time or place constraints.

2. Defining AI

In 1956, the term “artificial intelligence” was introduced during a workshop held at Dartmouth College (Holmes et al., 2022). Holmes et al. maintain that since its inception, the field of artificial intelligence (AI) has undergone cycles of intense interest and ambitious prognostications, interspersed with periods known as “AI winters”, in which these lofty predictions failed to come to fruition and funding for AI research was severely reduced. These cycles reflect the complex nature and limitations of AI. On the one hand, the grand predictions about AI reflect the potential and promise of this field in developing intelligent systems that can perform complex tasks, learn from data, and make decisions with minimal human intervention (Holmes et al., 2022). These predictions have fuelled interest and investment in AI research over the years. On the other hand, the AI winters show that these lofty estimates are not always achieved, leading to a significant decline in funding and interest in the field (ibid.).

This observation emphasises the importance of accurately defining AI and its capabilities, developing realistic expectations about what AI can and cannot achieve, and addressing the technological and ethical difficulties that arise as AI becomes more prevalent worldwide. It is challenging to provide a precise and universal definition of AI. An attempt was made by McCarthy, in 1955, who defined it as “the science and engineering of making intelligent machines” (Stanford University, 2020). Russell and Norvig (2020, p. 2) have analysed various definitions of artificial intelligence (AI) from eight distinct sources and have identified four broad categories based on the underlying patterns of behaviour and thinking. The identified categories are (1) systems that think like humans, (2) systems that think rationally, (3) systems that act like humans, and (4) systems that act rationally. This categorisation highlights that the definitions of AI primarily aim to capture two fundamental traits, namely thinking and behaviour.

UNICEF’s definition of AI appears to encompass all the key traits associated with artificial intelligence. According to UNICEF (2021), AI refers to machine-based systems that make predictions, recommendations, or decisions based on human-defined objectives (p. 16). These systems can interact with humans and affect real or virtual environments directly or indirectly (UNICEF, 2021, p. 16). Moreover, AI systems can operate autonomously, and their behaviour can adapt to changing contexts through learning (ibid.). UNICEF’s definition provides a comprehensive understanding of AI, highlighting its diverse capabilities and potential impact on society. It also highlights an important aspect of AI, namely learning. AI systems are pre-programmed to interact with humans and learn from this interaction to improve their abilities.

3. Methodology

The data for this study were collected through an online survey using a questionnaire. The questionnaire was designed to explore EFL teachers’ perceptions of the impact of AI-based assessment methods on formative assessment in EFL writing instruction. The questionnaire was administered to a sample of fifteen EFL teachers from different universities. Participation was voluntary and informed consent was obtained from all participants. No personally identifiable information was collected.

The questionnaire comprised thirteen (13) questions organised into four sections. The first section gathered demographic information about the participants, such as age, gender, and level of education. The second section aimed to gauge the participants’ perceptions of AI-based assessment methods and their potential impact on EFL writing instruction. The third section assessed the effectiveness of AI-based assessment tools in providing feedback and improving formative assessment practices. Finally, the fourth section included open-ended questions that allowed participants to elaborate on their responses and express further views on the topic. The questionnaire was reviewed by two subject-matter experts to establish content validity; internal consistency was estimated for multi-item scales (Cronbach’s α = 0.83).
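For readers unfamiliar with the internal-consistency estimate mentioned above, the following is a minimal sketch of the standard Cronbach’s α formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). It is not the authors’ analysis script: the function name `cronbach_alpha` and the example responses are hypothetical and do not reproduce the survey data or the reported value of 0.83.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a multi-item scale.

    scores: list of rows, one row per respondent, each row holding that
    respondent's score on every item of the scale.
    """
    k = len(scores[0])  # number of items in the scale
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses (5 respondents x 3 items),
# invented purely for illustration.
example_scores = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 4],
    [2, 3, 2],
    [4, 4, 5],
]
print(f"alpha = {cronbach_alpha(example_scores):.2f}")
```

Values closer to 1 indicate that the items of a scale vary together; with a sample as small as fifteen respondents, any such estimate should be read with caution.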

The questionnaire was self-administered and completed online; its open-ended questions allowed a more in-depth investigation of the participants’ perceptions and experiences.

The questionnaire data were analysed quantitatively and qualitatively. The quantitative data were analysed using descriptive statistics to summarise the responses to each question. The qualitative data were analysed using content analysis to identify themes and patterns in the participants’ responses.
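As an illustration of the descriptive step, the sketch below tabulates counts and percentages for one closed question. It is an assumption about how such a summary could be produced, not the authors’ procedure: the function name `frequency_table` and the answer labels are hypothetical, and the only figures borrowed from the study are the split of 12 non-users and 3 users among the 15 respondents.

```python
from collections import Counter


def frequency_table(responses):
    """Return {answer: (count, percentage)} for a list of answers."""
    counts = Counter(responses)
    n = len(responses)
    return {
        answer: (count, round(100 * count / n, 1))
        for answer, count in counts.items()
    }


# Answer labels are hypothetical; the 12/3 split follows the reported sample.
responses = ["not used"] * 12 + ["used"] * 3
for answer, (count, pct) in frequency_table(responses).items():
    print(f"{answer}: {count} ({pct}%)")
```

Reporting the raw count next to each percentage helps readers judge how much weight a share can bear in a small sample.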

The use of an online survey as a data-gathering tool has significant drawbacks, such as the possibility of response bias and the inability to clarify participants’ responses. The researchers, however, took steps to mitigate these constraints by ensuring that the questionnaire was straightforward and easy to interpret.

4. Results and Discussion

A questionnaire with four sections was completed by fifteen EFL writing instructors, providing demographic information, perceptions and the perceived effectiveness of AI-based assessment methods, and open-ended responses. Most participants were between 24 and 50 years old, with an average of 8 years’ experience, and held a Master’s degree in English.

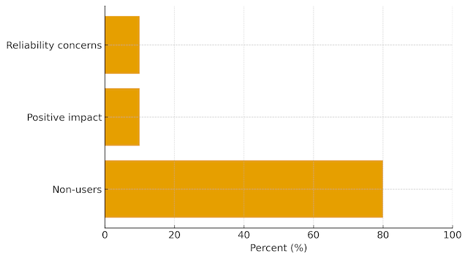

Figure 1. Perception of AI-Based Assessment Methods (All Respondents)

Sample: n = 15; Percentages refer to all respondents. Source: Authors’ survey (2025). Note: Totals may not sum to 100% due to rounding.

As shown in Figure 1, 80% of all respondents had not used AI-based assessment methods in their EFL writing classes, while 10% of all respondents reported positive impacts on formative assessment practices and 10% expressed concerns about reliability.

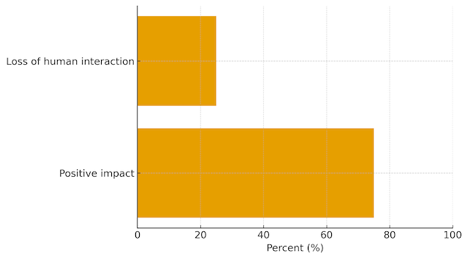

Figure 2. Perceptions among Non-Users of AI-Based Assessment Methods

Sample: non-users (n = 12; 80% of total n = 15); Percentages refer to non-users only. Source: Authors’ survey (2025). Note: Totals may not sum to 100% due to rounding.

For those who had not used AI-based assessment methods, 75% believed they could have a positive impact, while 25% expressed concerns about the loss of human interaction. These findings indicate a potential gap in the integration of technology in EFL writing instruction in Algerian universities.

Advantages of using AI-based assessment methods included providing more efficient and objective feedback, identifying patterns, and reducing workload, while disadvantages included a lack of accuracy and detailed feedback, and the loss of human interaction. Regarding AI-based assessment tools, 80% of participants had not used them; users accounting for 15% of all respondents reported enhanced effectiveness of formative assessment practices, while users accounting for 5% of all respondents expressed concerns about complexity (see Figure 4).

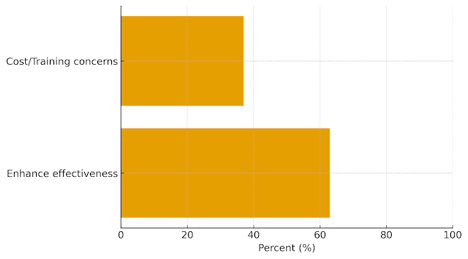

Figure 3. Perceived Effectiveness among Non-Users of AI-Based Assessment Tools

Sample: non-users (n = 12; 80% of total n = 15); Percentages refer to non-users only. Source: Authors’ survey (2025). Note: Totals may not sum to 100% due to rounding.

For those who had not used AI-based assessment tools, 63% believed they could enhance effectiveness, while 37% expressed concerns about cost and the need for training and support (see Figure 3). These results suggest that Algerian EFL teachers are aware of the positive impact of AI-based assessment in providing formative writing assessment, but they are also aware of the drawbacks of these tools.

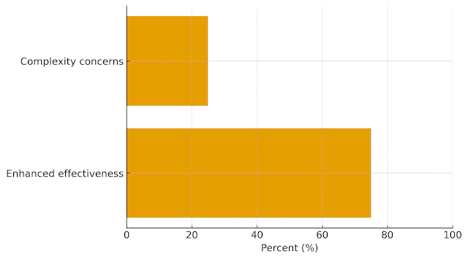

Figure 4. Reported Effectiveness among Users of AI-Based Assessment Tools

Sample: users (n = 3; 20% of total n = 15); Percentages refer to users only. Source: Authors’ survey (2025). Note: Percentages (75%/25%) are derived from overall shares reported (15%/5% of total n) with users = 20% of respondents; totals may not sum to 100% due to rounding.
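The conversion described in the note to Figure 4 can be checked with a short calculation: a share expressed as a percentage of all respondents is divided by the share of respondents who belong to the subgroup. The sketch below assumes that this is the whole of the conversion; the function name `share_within_subgroup` is hypothetical.

```python
def share_within_subgroup(overall_pct, subgroup_pct):
    """Re-express a share of the whole sample as a share of a subgroup.

    overall_pct: percentage of all respondents giving an answer.
    subgroup_pct: percentage of all respondents who are in the subgroup.
    """
    if subgroup_pct <= 0:
        raise ValueError("subgroup share must be positive")
    return 100 * overall_pct / subgroup_pct


# Users are 20% of respondents; 15% and 5% of all respondents gave each answer.
print(share_within_subgroup(15, 20))  # enhanced effectiveness, users only
print(share_within_subgroup(5, 20))   # concerns about complexity, users only
```

Because the user subgroup contains only three respondents, whole-person shares within it can only be 0%, 33%, 67%, or 100%, so the derived percentages are best read as approximate.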

The main features of effective AI-based assessment tools included accuracy, ease of use and integration, flexibility, and compatibility. The main challenges of implementing AI-based assessment methods and tools included concerns about accuracy and reliability, the need for training and support, and potential resistance to moving away from traditional assessment methods. Participants suggested gradual integration with simple tasks and proper training, and indicated that human supervision is still necessary in the process of assessment.

The results suggest that there is potential for AI-based assessment methods and tools to enhance formative assessment practices in EFL writing instruction, but their integration should be approached with caution and proper consideration of potential benefits and drawbacks.

Conclusion

Our study shed light on teachers’ perceptions of the impact of AI-based assessment methods on formative assessment in EFL writing instruction. AI has the potential to revolutionise EFL assessment, offering new insights into language learning and teaching. AI-based assessment tools can provide efficient, reliable, and personalised feedback on language skills, helping learners track progress and identify areas for improvement. However, there are potential challenges and ethical considerations that need to be addressed to ensure that AI-based assessment tools are fair, accurate, and beneficial for all learners. As AI technology evolves, we are likely to see further developments in AI-based assessment in EFL, with implications for language learning and teaching.